This guide serves as an introduction to several key entities that can be managed with Apache Polaris (Incubating), describes how to build and deploy Polaris locally, and finally includes examples of how to use Polaris with Apache Spark™.

This guide covers building Polaris, deploying it locally or via Docker, and interacting with it using the command-line interface and Apache Spark. Before proceeding with Polaris, be sure to satisfy the relevant prerequisites listed here.

Building and Deploying Polaris

To get the latest Polaris code, you'll need to clone the repository using git. You can install git using homebrew:

brew install git

Then, use git to clone the Polaris repo:

cd ~

git clone https://github.com/apache/polaris.git

With Docker

If you plan to deploy Polaris inside Docker, you'll need to install Docker itself. For example, this can be done using homebrew:

brew install --cask docker

Once installed, make sure Docker is running.

From Source

If you plan to build Polaris from source yourself, you will need to satisfy a few prerequisites first.

Polaris is built using Gradle and is compatible with Java 21. We recommend using jenv to manage multiple Java versions. For example, to install Java 21 via homebrew and configure it with jenv:

cd ~/polaris

brew install openjdk@21 jenv

jenv add $(brew --prefix openjdk@21)

jenv local 21

Connecting to Polaris

Polaris is compatible with any Apache Iceberg client that supports the REST API. Depending on the client you plan to use, refer to the prerequisites below.

With Spark

If you want to connect to Polaris with Apache Spark, you'll need to start by cloning Spark. As above, make sure git is installed first. You can install it with homebrew:

brew install git

Then, clone Spark and check out a versioned branch. This guide uses Spark 3.5.

cd ~

git clone https://github.com/apache/spark.git

cd ~/spark

git checkout branch-3.5

Polaris can be deployed via a lightweight Docker image or as a standalone process. Before starting, be sure that you've satisfied the relevant prerequisites detailed above.

Docker Image

To start using Polaris in Docker, launch Polaris while Docker is running:

cd ~/polaris

docker compose -f docker-compose.yml up --build

Once the polaris-polaris container is up, you can continue to Defining a Catalog.

Building Polaris

Run Polaris locally with:

cd ~/polaris

./gradlew runApp

You should see output for some time as Polaris builds and starts up. Eventually, the log output will stop and you should see messages that resemble the following:

INFO [...] [main] [] o.e.j.s.handler.ContextHandler: Started i.d.j.MutableServletContextHandler@...

INFO [...] [main] [] o.e.j.server.AbstractConnector: Started application@...

INFO [...] [main] [] o.e.j.server.AbstractConnector: Started admin@...

INFO [...] [main] [] o.eclipse.jetty.server.Server: Started Server@...

At this point, Polaris is running.

For this tutorial, we'll launch an instance of Polaris that stores entities only in-memory. This means that any entities that you define will be destroyed when Polaris is shut down. It also means that Polaris will automatically bootstrap itself with root credentials. For more information on how to configure Polaris for production usage, see the docs.

When Polaris is launched in in-memory mode, the root principal credentials are printed to stdout on initial startup. For example:

realm: default-realm root principal credentials: <client-id>:<client-secret>

Be sure to take note of these credentials, as we'll be using them below. You can also set these credentials as environment variables for use with the Polaris CLI:

export CLIENT_ID=<client-id>

export CLIENT_SECRET=<client-secret>
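If you capture the startup output in a script, the credentials line can be split mechanically. The following is a minimal sketch in Python that assumes the line has exactly the shape shown above; the function name `parse_root_credentials` and the sample values `abc123` and `s3cr3t` are hypothetical and not part of Polaris.

```python
MARKER = "root principal credentials:"


def parse_root_credentials(line: str) -> tuple[str, str, str]:
    """Split a startup line into (realm, client_id, client_secret).

    Assumes the format 'realm: <realm> root principal credentials: <id>:<secret>'.
    """
    if MARKER not in line:
        raise ValueError(f"not a credentials line: {line!r}")
    prefix, _, creds = line.partition(MARKER)
    realm = prefix.strip().removeprefix("realm:").strip()
    client_id, _, client_secret = creds.strip().partition(":")
    if not client_id or not client_secret:
        raise ValueError(f"missing client id or secret: {line!r}")
    return realm, client_id, client_secret


# Hypothetical sample line; real values come from your own Polaris startup log.
sample = "realm: default-realm root principal credentials: abc123:s3cr3t"
realm, client_id, client_secret = parse_root_credentials(sample)

# Print shell export statements that can be pasted into a terminal.
print(f"export CLIENT_ID={client_id}")
print(f"export CLIENT_SECRET={client_secret}")
```

The sketch only prints `export` statements, because environment variables set inside a Python process are not visible to the shell that runs the Polaris CLI.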

In Polaris, the catalog is the top-level entity that objects like tables and views are organized under. With a Polaris service running, you can create a catalog like so:

cd ~/polaris

./polaris \
  --client-id ${CLIENT_ID} \
  --client-secret ${CLIENT_SECRET} \
  catalogs \
  create \
  --storage-type s3 \
  --default-base-location ${DEFAULT_BASE_LOCATION} \
  --role-arn ${ROLE_ARN} \
  quickstart_catalog

This will create a new catalog called quickstart_catalog.

The DEFAULT_BASE_LOCATION you provide will be the default location that objects in this catalog should be stored in, and the ROLE_ARN you provide should be a Role ARN with access to read and write data in that location. These credentials will be provided to engines reading data from the catalog once they have authenticated with Polaris using credentials that have access to those resources.

If you’re using a storage type other than S3, such as Azure, you’ll provide a different type of credential than a Role ARN. For more details on supported storage types, see the docs.

Additionally, if Polaris is running somewhere other than localhost:8181, you can specify the correct hostname and port by providing --host and --port flags. For the full set of options supported by the CLI, please refer to the docs.
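When the same command has to be issued against several hosts, it can help to assemble the argument list programmatically. The sketch below builds the `catalogs create` invocation using only the flags mentioned in this guide. The helper name `build_create_catalog_cmd`, the bucket, the role ARN, and the hostname are hypothetical placeholders, and placing `--host` and `--port` next to the credential flags is an assumption; check the CLI docs for the exact placement.

```python
import shlex


def build_create_catalog_cmd(client_id, client_secret, name,
                             base_location, role_arn,
                             host=None, port=None):
    """Return the argument list for creating an S3-backed catalog."""
    cmd = ["./polaris",
           "--client-id", client_id,
           "--client-secret", client_secret]
    # Assumption: host and port are global options given before the subcommand.
    if host is not None:
        cmd += ["--host", host]
    if port is not None:
        cmd += ["--port", str(port)]
    cmd += ["catalogs", "create",
            "--storage-type", "s3",
            "--default-base-location", base_location,
            "--role-arn", role_arn,
            name]
    return cmd


# Hypothetical values for illustration only.
cmd = build_create_catalog_cmd(
    "my-client-id", "my-client-secret", "quickstart_catalog",
    "s3://example-bucket/quickstart",
    "arn:aws:iam::123456789012:role/example-role",
    host="polaris.example.com", port=8181,
)
# shlex.join quotes each argument so the line is safe to paste into a shell.
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, cwd=...)` from the Polaris checkout would execute it; the sketch stops at printing so that it has no side effects.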

Creating a Principal and Assigning it Privileges

With a catalog created, we can create a principal that has access to manage that catalog. For details on how to configure the Polaris CLI, see the section above or refer to the docs.

./polaris \
  --client-id ${CLIENT_ID} \
  --client-secret ${CLIENT_SECRET} \
  principals \
  create \
  quickstart_user

./polaris \
  --client-id ${CLIENT_ID} \
  --client-secret ${CLIENT_SECRET} \
  principal-roles \
  create \
  quickstart_user_role

./polaris \
  --client-id ${CLIENT_ID} \
  --client-secret ${CLIENT_SECRET} \
  catalog-roles \
  create \
  --catalog quickstart_catalog \
  quickstart_catalog_role

Be sure to provide the necessary credentials, hostname, and port as before.

When the principals create command completes successfully, it will return the credentials for this new principal. Be sure to note these down for later. For example:

./polaris ... principals create example

{"clientId": "XXXX", "clientSecret": "YYYY"}

Now, we grant the principal role we created to the principal, and grant the catalog role we created to the principal role. For more information on these entities, please refer to the linked documentation.

./polaris \
  --client-id ${CLIENT_ID} \
  --client-secret ${CLIENT_SECRET} \
  principal-roles \
  grant \
  --principal quickstart_user \
  quickstart_user_role

./polaris \
  --client-id ${CLIENT_ID} \
  --client-secret ${CLIENT_SECRET} \
  catalog-roles \
  grant \
  --catalog quickstart_catalog \
  --principal-role quickstart_user_role \
  quickstart_catalog_role

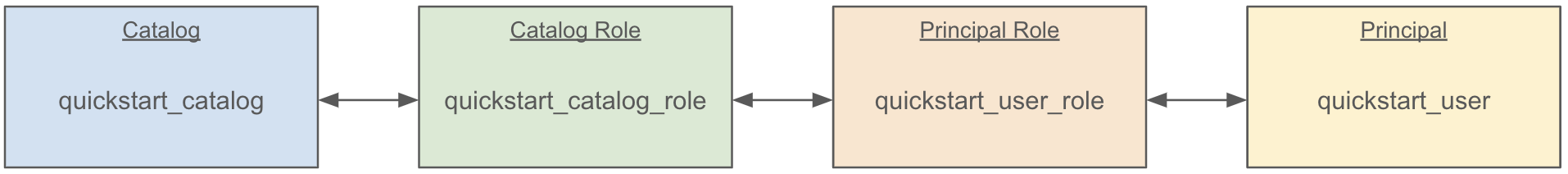

Now, we’ve linked our principal to the catalog via roles like so:

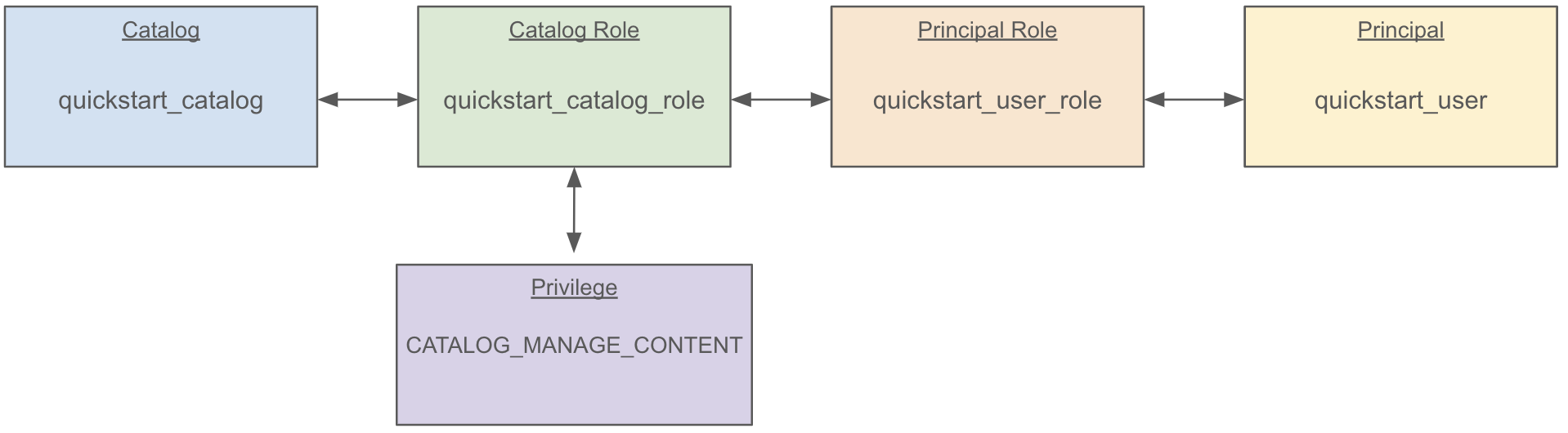

In order to give this principal the ability to interact with the catalog, we must assign some privileges. For the time being, we will give this principal the ability to fully manage content in our new catalog. We can do this with the CLI like so:

./polaris \
  --client-id ${CLIENT_ID} \
  --client-secret ${CLIENT_SECRET} \
  privileges \
  catalog \
  grant \
  --catalog quickstart_catalog \
  --catalog-role quickstart_catalog_role \
  CATALOG_MANAGE_CONTENT

This grants the catalog privilege CATALOG_MANAGE_CONTENT to our catalog role, linking everything together.

CATALOG_MANAGE_CONTENT has create/list/read/write privileges on all entities within the catalog. The same privilege could be granted to a namespace, in which case the principal could create/list/read/write any entity under that namespace.

At this point, we’ve created a principal and granted it the ability to manage a catalog. We can now use an external engine to assume that principal, access our catalog, and store data in that catalog using Apache Iceberg.
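To make the chain of grants concrete, here is a small in-memory model of it in Python. It is only an illustration of how a privilege reaches a principal through a principal role and a catalog role; the class `AccessModel` and its method names are hypothetical and say nothing about how Polaris stores or evaluates grants internally.

```python
from collections import defaultdict


class AccessModel:
    """Toy model: principal -> principal roles -> catalog roles -> privileges."""

    def __init__(self):
        self.principal_roles = defaultdict(set)  # principal -> {principal role}
        self.catalog_roles = defaultdict(set)    # principal role -> {(catalog, catalog role)}
        self.privileges = defaultdict(set)       # (catalog, catalog role) -> {privilege}

    def grant_principal_role(self, principal, principal_role):
        self.principal_roles[principal].add(principal_role)

    def grant_catalog_role(self, catalog, catalog_role, principal_role):
        self.catalog_roles[principal_role].add((catalog, catalog_role))

    def grant_privilege(self, catalog, catalog_role, privilege):
        self.privileges[(catalog, catalog_role)].add(privilege)

    def revoke_privilege(self, catalog, catalog_role, privilege):
        self.privileges[(catalog, catalog_role)].discard(privilege)

    def effective_privileges(self, principal, catalog):
        """Collect every privilege the principal holds on the given catalog."""
        result = set()
        for principal_role in self.principal_roles.get(principal, ()):
            for cat, catalog_role in self.catalog_roles.get(principal_role, ()):
                if cat == catalog:
                    result |= self.privileges.get((cat, catalog_role), set())
        return result


# Mirror the grants made with the CLI in this guide.
model = AccessModel()
model.grant_principal_role("quickstart_user", "quickstart_user_role")
model.grant_catalog_role("quickstart_catalog", "quickstart_catalog_role",
                         "quickstart_user_role")
model.grant_privilege("quickstart_catalog", "quickstart_catalog_role",
                      "CATALOG_MANAGE_CONTENT")
print(sorted(model.effective_privileges("quickstart_user", "quickstart_catalog")))
```

Note that the principal never holds a privilege directly: removing any link in the chain (the principal role grant, the catalog role grant, or the privilege itself) empties the effective set.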

Connecting with Spark

To use a Polaris-managed catalog in Apache Spark, we can configure Spark to use the Iceberg catalog REST API.

This guide uses Apache Spark 3.5, but be sure to find the appropriate iceberg-spark package for your Spark version. From a local Spark clone on the branch-3.5 branch we can run the following:

Note: the credentials provided here are those for our principal, not the root credentials.

bin/spark-shell \
  --packages org.apache.iceberg:iceberg-spark-runtime-3.5_2.12:1.5.2,org.apache.hadoop:hadoop-aws:3.4.0 \
  --conf spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions \
  --conf spark.sql.catalog.quickstart_catalog.warehouse=quickstart_catalog \
  --conf spark.sql.catalog.quickstart_catalog.header.X-Iceberg-Access-Delegation=vended-credentials \
  --conf spark.sql.catalog.quickstart_catalog=org.apache.iceberg.spark.SparkCatalog \
  --conf spark.sql.catalog.quickstart_catalog.catalog-impl=org.apache.iceberg.rest.RESTCatalog \
  --conf spark.sql.catalog.quickstart_catalog.uri=http://localhost:8181/api/catalog \
  --conf spark.sql.catalog.quickstart_catalog.credential='XXXX:YYYY' \
  --conf spark.sql.catalog.quickstart_catalog.scope='PRINCIPAL_ROLE:ALL' \
  --conf spark.sql.catalog.quickstart_catalog.token-refresh-enabled=true

Replace XXXX and YYYY with the client ID and client secret generated when you created the quickstart_user principal.

Similar to the CLI commands above, this configures Spark to use the Polaris instance running at localhost:8181. If your Polaris server is running elsewhere, be sure to update the configuration appropriately.

Finally, note that we include the hadoop-aws package here. If your table is using a different filesystem, be sure to include the appropriate dependency.
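Because every catalog setting shares the `spark.sql.catalog.<name>` prefix, the configuration is easy to generate for a different catalog name or server address. The sketch below rebuilds the same key/value pairs used in this guide as a Python dictionary and turns them into `--conf` arguments. The helper names `polaris_catalog_conf` and `to_conf_args` are hypothetical; the keys and values are copied from the command in this section.

```python
def polaris_catalog_conf(catalog: str, uri: str, credential: str) -> dict:
    """Return the Spark settings this guide uses for a Polaris-managed catalog."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.catalog-impl": "org.apache.iceberg.rest.RESTCatalog",
        f"{prefix}.uri": uri,
        f"{prefix}.warehouse": catalog,
        f"{prefix}.credential": credential,  # "<client-id>:<client-secret>"
        f"{prefix}.scope": "PRINCIPAL_ROLE:ALL",
        f"{prefix}.header.X-Iceberg-Access-Delegation": "vended-credentials",
        f"{prefix}.token-refresh-enabled": "true",
    }


def to_conf_args(conf: dict) -> list:
    """Flatten a settings dict into ['--conf', 'key=value', ...]."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


conf = polaris_catalog_conf(
    "quickstart_catalog",
    "http://localhost:8181/api/catalog",
    "XXXX:YYYY",  # replace with the principal's client ID and secret
)
for arg in to_conf_args(conf):
    print(arg)
```

Keeping the settings in one dictionary also makes it straightforward to pass them to a session builder in another client, as long as that client accepts the same Iceberg REST catalog properties.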

Once the Spark session starts, we can create a namespace and table within the catalog:

spark.sql("USE quickstart_catalog")

spark.sql("CREATE NAMESPACE IF NOT EXISTS quickstart_namespace")

spark.sql("CREATE NAMESPACE IF NOT EXISTS quickstart_namespace.schema")

spark.sql("USE NAMESPACE quickstart_namespace.schema")

spark.sql("""
  CREATE TABLE IF NOT EXISTS quickstart_table (
    id BIGINT, data STRING
  )
  USING ICEBERG
""")

We can now use this table like any other:

spark.sql("INSERT INTO quickstart_table VALUES (1, 'some data')")

spark.sql("SELECT * FROM quickstart_table").show(false)

. . .

+---+---------+

|id |data     |

+---+---------+

|1  |some data|

+---+---------+

If at any time access is revoked...

./polaris \
  --client-id ${CLIENT_ID} \
  --client-secret ${CLIENT_SECRET} \
  privileges \
  catalog \
  revoke \
  --catalog quickstart_catalog \
  --catalog-role quickstart_catalog_role \
  CATALOG_MANAGE_CONTENT

Spark will lose access to the table:

spark.sql("SELECT * FROM quickstart_table").show(false)

org.apache.iceberg.exceptions.ForbiddenException: Forbidden: Principal 'quickstart_user' with activated PrincipalRoles '[]' and activated ids '[6, 7]' is not authorized for op LOAD_TABLE_WITH_READ_DELEGATION

Apache Polaris (Incubating) is a catalog implementation for Apache Iceberg™ tables and is built on the open source Apache Iceberg™ REST protocol.

With Polaris, you can provide centralized, secure read and write access to your Iceberg tables across different REST-compatible query engines.

This section introduces key concepts associated with using Apache Polaris (Incubating).

In the following diagram, a sample Apache Polaris (Incubating) structure with nested namespaces is shown for Catalog1. No tables or namespaces have been created yet for Catalog2 or Catalog3.

Catalog

In Polaris, you can create one or more catalog resources to organize Iceberg tables.

Configure your catalog by setting values in the storage configuration for S3, Azure, or Google Cloud Storage. An Iceberg catalog enables a query engine to manage and organize tables. The catalog forms the first architectural layer in the Apache Iceberg™ table specification and must support the following tasks:

Storing the current metadata pointer for one or more Iceberg tables. A metadata pointer maps a table name to the location of that table's current metadata file.

Performing atomic operations so that you can update the current metadata pointer for a table to the metadata pointer of a new version of the table.
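The two tasks above amount to a lookup table with a compare-and-swap update. The following is a minimal in-memory sketch of that idea in Python; it is not how Polaris or any particular Iceberg catalog is implemented, and the class names, table name, and metadata file paths are hypothetical.

```python
import threading


class CommitConflict(Exception):
    """Raised when the pointer changed since the writer last read it."""


class PointerStore:
    """Maps table names to the location of their current metadata file."""

    def __init__(self):
        self._pointers = {}
        self._lock = threading.Lock()

    def current(self, table):
        with self._lock:
            return self._pointers.get(table)

    def swap(self, table, expected, new):
        """Atomically move the pointer from `expected` to `new`."""
        with self._lock:
            actual = self._pointers.get(table)
            if actual != expected:
                raise CommitConflict(
                    f"{table}: expected {expected!r}, found {actual!r}")
            self._pointers[table] = new


store = PointerStore()
# First commit: the table has no pointer yet, so the expected value is None.
store.swap("ns.orders", None, "s3://example-bucket/ns/orders/metadata/v1.json")
# A second writer that read v1 commits v2.
store.swap("ns.orders",
           "s3://example-bucket/ns/orders/metadata/v1.json",
           "s3://example-bucket/ns/orders/metadata/v2.json")
print(store.current("ns.orders"))
```

A writer that still believes v1 is current would get `CommitConflict` and must re-read the table before retrying, which is what keeps concurrent commits from silently overwriting each other.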

To learn more about Iceberg catalogs, see the Apache Iceberg™ documentation.

Catalog types

A catalog can be one of the following two types:

Internal: The catalog is managed by Polaris. Tables from this catalog can be read and written in Polaris.

External: The catalog is externally managed by another Iceberg catalog provider (for example, Snowflake, Glue, Dremio Arctic). Tables from this catalog are synced to Polaris. These tables are read-only in Polaris. In the current release, only a Snowflake external catalog is provided.

A catalog is configured with a storage configuration that can point to S3, Azure storage, or GCS.

Namespace

You create namespaces to logically group Iceberg tables within a catalog. A catalog can have multiple namespaces. You can also create nested namespaces. Iceberg tables belong to namespaces.

Apache Iceberg™ tables and catalogs

In an internal catalog, an Iceberg table is registered in Polaris, but read and written via query engines. The table data and metadata are stored in your external cloud storage. The table uses Polaris as the Iceberg catalog.

If you have tables housed in another Iceberg catalog, you can sync these tables to an external catalog in Polaris. If you sync this catalog to Polaris, it appears as an external catalog in Polaris. Clients connecting to the external catalog can read from or write to these tables. However, clients connecting to Polaris will only be able to read from these tables.

Important

For the access privileges defined for a catalog to be enforced correctly, the following conditions must be met:

- The directory only contains the data files that belong to a single table.

- The directory hierarchy matches the namespace hierarchy for the catalog.

For example, if a catalog includes the following items:

- Top-level namespace namespace1

- Nested namespace namespace1a

- A customers table, which is grouped under nested namespace namespace1a

- An orders table, which is grouped under nested namespace namespace1a

The directory hierarchy for the catalog must follow this structure:

- /namespace1/namespace1a/customers/<files for the customers table *only*>

- /namespace1/namespace1a/orders/<files for the orders table *only*>
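The layout rule can be checked mechanically. The sketch below derives the expected directory for a table from its namespace levels and tests whether two table directories overlap. The function names are hypothetical, and the check works on path strings only; it does not inspect real storage.

```python
from pathlib import PurePosixPath


def table_directory(namespace_levels, table):
    """Directory that mirrors the namespace hierarchy for one table."""
    return str(PurePosixPath("/", *namespace_levels, table))


def overlaps(dir_a, dir_b):
    """True if one directory is the other or is nested inside it."""
    a, b = PurePosixPath(dir_a), PurePosixPath(dir_b)
    return a == b or a in b.parents or b in a.parents


customers = table_directory(["namespace1", "namespace1a"], "customers")
orders = table_directory(["namespace1", "namespace1a"], "orders")
print(customers)
print(orders)
print(overlaps(customers, orders))
```

Sibling directories such as the two above do not overlap, so each one can hold the files of a single table. A table placed at `/namespace1/namespace1a/customers/archive` would overlap with `customers` and break the first condition.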

Service principal

A service principal is an entity that you create in Polaris. Each service principal encapsulates credentials that you use to connect to Polaris.

Query engines use service principals to connect to catalogs.

Polaris generates a Client ID and Client Secret pair for each service principal.

The following table displays example service principals that you might create in Polaris:

| Service connection name | Purpose |

|---|---|

| Flink ingestion | For Apache Flink® to ingest streaming data into Apache Iceberg™ tables. |

| Spark ETL pipeline | For Apache Spark™ to run ETL pipeline jobs on Iceberg tables. |

| Snowflake data pipelines | For Snowflake to run data pipelines for transforming data in Apache Iceberg™ tables. |

| Trino BI dashboard | For Trino to run BI queries for powering a dashboard. |

| Snowflake AI team | For Snowflake to run AI jobs on data in Apache Iceberg™ tables. |

Service connection

A service connection represents a REST-compatible engine (such as Apache Spark™, Apache Flink®, or Trino) that can read from and write to Polaris Catalog. When creating a new service connection, the Polaris administrator grants the service principal that is created with the new service connection either a new or existing principal role. A principal role is a resource in Polaris that you can use to logically group Polaris service principals together and grant privileges on securable objects. For more information, see Principal role. Polaris uses a role-based access control (RBAC) model to grant service principals access to resources. For more information, see Access control. For a diagram of this model, see RBAC model.

If the Polaris administrator grants the service principal for the new service connection a new principal role, the service principal doesn't have any privileges granted to it yet. When securing the catalog that the new service connection will connect to, the Polaris administrator grants privileges to catalog roles and then grants these catalog roles to the new principal role. As a result, the service principal for the new service connection has these privileges. For more information about catalog roles, see Catalog role.

If the Polaris administrator grants an existing principal role to the service principal for the new service connection, the service principal has the same privileges granted to the catalog roles that are granted to the existing principal role. If needed, the Polaris administrator can grant additional catalog roles to the existing principal role or remove catalog roles from it to adjust the privileges bestowed to the service principal. For an example of how RBAC works in Polaris, see RBAC example.

Storage configuration

A storage configuration stores a generated identity and access management (IAM) entity for your external cloud storage and is created when you create a catalog. The storage configuration is used to set the values to connect Polaris to your cloud storage. During the catalog creation process, an IAM entity is generated and used to create a trust relationship between the cloud storage provider and Polaris Catalog.

When you create a catalog, you supply the following information about your external cloud storage:

| Cloud storage provider | Information |

|---|---|

| Amazon S3 | |

| Google Cloud Storage (GCS) | |

| Azure | |

In the following example workflow, Bob creates an Apache Iceberg™ table named Table1 and Alice reads data from Table1.

Bob uses Apache Spark™ to create the Table1 table under the Namespace1 namespace in the Catalog1 catalog and insert values into Table1.

Bob can create Table1 and insert data into it because he is using a service connection with a service principal that has the privileges to perform these actions.

Alice uses Trino to read data from Table1.

Alice can read data from Table1 because she is using a service connection with a service principal that has the privileges to perform this action.

This section describes security and access control.

Credential vending

To secure interactions with service connections, Polaris vends temporary storage credentials to the query engine during query execution. These credentials allow the query engine to run the query without requiring access to your external cloud storage for Iceberg tables. This process is called credential vending.
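As a rough mental model, a vended credential can be pictured as a short-lived token that is limited to one table's storage location and to either read-only or read and write access. The Python sketch below models only that idea. The class `VendedCredential`, the `vend` function, the 15-minute lifetime, and the prefix-based scoping are all assumptions made for illustration and do not describe what Polaris or a cloud provider actually issues.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class VendedCredential:
    table_location: str   # storage prefix the credential is limited to
    read_only: bool
    expires_at: datetime

    def allows(self, path: str, write: bool, now: datetime) -> bool:
        """Check whether an access is permitted by this credential."""
        if now >= self.expires_at:
            return False               # temporary: unusable after expiry
        if write and self.read_only:
            return False               # read-only credentials cannot write
        prefix = self.table_location.rstrip("/") + "/"
        return path.startswith(prefix)  # only files under the table location


def vend(table_location: str, can_write: bool, now: datetime,
         ttl: timedelta = timedelta(minutes=15)) -> VendedCredential:
    """Issue a hypothetical credential; the ttl default is an assumption."""
    return VendedCredential(table_location, not can_write, now + ttl)


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
cred = vend("s3://example-bucket/ns/orders", can_write=False, now=now)
data_file = "s3://example-bucket/ns/orders/data/part-0.parquet"
print(cred.allows(data_file, write=False, now=now))
print(cred.allows(data_file, write=True, now=now))
print(cred.allows(data_file, write=False, now=now + timedelta(hours=1)))
```

The point of the model is that the query engine never needs long-lived storage credentials of its own; it works with whatever the catalog hands out for the duration of the query.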

Identity and access management (IAM)

Polaris uses the identity and access management (IAM) entity to securely connect to your storage for accessing table data, Iceberg metadata, and manifest files that store the table schema, partitions, and other metadata. Polaris retains the IAM entity for your storage location.

Access control

Polaris enforces the access control that you configure across all tables registered with the service and governs security for all queries from query engines in a consistent manner.

Polaris uses a role-based access control (RBAC) model that lets you centrally configure access for Polaris service principals to catalogs, namespaces, and tables.

Polaris RBAC uses two different role types to delegate privileges:

Principal roles: Granted to Polaris service principals and analogous to roles in other access control systems that you grant to service principals.

Catalog roles: Configured with certain privileges on Polaris catalog resources and granted to principal roles.

For more information, see Access control.

Apache®, Apache Iceberg™, Apache Spark™, Apache Flink®, and Flink® are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries.

This page documents various entities that can be managed in Apache Polaris (Incubating).

A catalog is a top-level entity in Polaris that may contain other entities like namespaces and tables. These map directly to Apache Iceberg catalogs.

For information on managing catalogs with the REST API or for more information on what data can be associated with a catalog, see the API docs.

Storage Type

All catalogs in Polaris are associated with a storage type. Valid Storage Types are S3, Azure, and GCS. The FILE type is also additionally available for testing. Each of these types relates to a different storage provider where data within the catalog may reside. Depending on the storage type, various other configurations may be set for a catalog including credentials to be used when accessing data inside the catalog.

For details on how to use Storage Types in the REST API, see the API docs.

A namespace is a logical entity that resides within a catalog and can contain other entities such as tables or views. Some other systems may refer to namespaces as schemas or databases.

In Polaris, namespaces can be nested. For example, a.b.c.d.e.f.g is a valid namespace. b is said to reside within a, and so on.
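The nesting can be expressed as a list of levels, where each prefix of the list is an enclosing namespace. A minimal Python sketch follows, assuming that individual level names do not themselves contain a dot; the function names are hypothetical.

```python
def ancestors(namespace: str) -> list:
    """Return every enclosing namespace, outermost first."""
    levels = namespace.split(".")
    return [".".join(levels[:i]) for i in range(1, len(levels))]


def resides_within(inner: str, outer: str) -> bool:
    """True if `inner` is nested (at any depth) inside `outer`."""
    return outer in ancestors(inner)


print(ancestors("a.b.c.d.e.f.g"))
print(resides_within("a.b", "a"))
print(resides_within("a", "a.b"))
```

For the namespace `a.b.c.d.e.f.g`, the first call lists the six enclosing namespaces from `a` down to `a.b.c.d.e.f`.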

For information on managing namespaces with the REST API or for more information on what data can be associated with a namespace, see the API docs.

Polaris tables are entities that map to Apache Iceberg tables.

For information on managing tables with the REST API or for more information on what data can be associated with a table, see the API docs.

Polaris views are entities that map to Apache Iceberg views.

For information on managing views with the REST API or for more information on what data can be associated with a view, see the API docs.

Polaris principals are unique identities that can be used to represent users or services. Each principal may have one or more principal roles assigned to it for the purpose of accessing catalogs and the entities within them.

For information on managing principals with the REST API or for more information on what data can be associated with a principal, see the API docs.

Polaris principal roles are labels that may be granted to principals. Each principal may have one or more principal roles, and the same principal role may be granted to multiple principals. Principal roles may be assigned based on the persona or responsibilities of a given principal, or on how that principal will need to access different entities within Polaris.

For information on managing principal roles with the REST API or for more information on what data can be associated with a principal role, see the API docs.

Polaris catalog roles are labels that may be granted to catalogs. Each catalog may have one or more catalog roles, and the same catalog role may be granted to multiple catalogs. Catalog roles may be assigned based on the nature of data that will reside in a catalog, or by the groups of users and services that might need to access that data.

Each catalog role may have multiple privileges granted to it, and each catalog role can be granted to one or more principal roles. This is the mechanism by which principals are granted access to entities inside a catalog such as namespaces and tables.

Polaris privileges are granted to catalog roles in order to grant principals with a given principal role some degree of access to catalogs with a given catalog role. When a privilege is granted to a catalog role, any principal roles granted that catalog role receive the privilege. In turn, any principals who are granted that principal role receive it.

A privilege can be scoped to any entity inside a catalog, including the catalog itself.

For a list of supported privileges for each privilege class, see the API docs:

This section provides information about how access control works for Apache Polaris (Incubating).

Polaris uses a role-based access control (RBAC) model in which the Polaris administrator assigns access privileges to catalog roles and then grants access to resources to service principals by assigning catalog roles to principal roles.

These are the key concepts to understanding access control in Polaris:

- Securable object

- Principal role

- Catalog role

- Privilege

A securable object is an object to which access can be granted. Polaris has the following securable objects:

- Catalog

- Namespace

- Iceberg table

- View

A principal role is a resource in Polaris that you can use to logically group Polaris service principals together and grant privileges on securable objects.

Polaris supports a many-to-one relationship between service principals and principal roles. For example, to grant the same privileges to multiple service principals, you can grant a single principal role to those service principals. A service principal can be granted one principal role. When registering a service connection, the Polaris administrator specifies the principal role that is granted to the service principal.

You don't grant privileges directly to a principal role. Instead, you configure object permissions at the catalog role level, and then grant catalog roles to a principal role.

The following table shows examples of principal roles that you might configure in Polaris:

| Principal role name | Description |

|---|---|

| Data_engineer | A role that is granted to multiple service principals for running data engineering jobs. |

| Data_scientist | A role that is granted to multiple service principals for running data science or AI jobs. |

A catalog role belongs to a particular catalog resource in Polaris and specifies a set of permissions for actions on the catalog or objects in the catalog, such as catalog namespaces or tables. You can create one or more catalog roles for a catalog.

You grant privileges to a catalog role and then grant the catalog role to a principal role to bestow the privileges to one or more service principals.

Note

If you update the privileges bestowed to a service principal, the updates won't take effect for up to one hour. This means that if you revoke or grant some privileges for a catalog, the updated privileges won't take effect on any service principal with access to that catalog for up to one hour.

Polaris also supports a many-to-many relationship between catalog roles and principal roles. You can grant the same catalog role to one or more principal roles. Likewise, a principal role can be granted one or more catalog roles.

The following table displays examples of catalog roles that you might configure in Polaris:

| Example Catalog role | Description |

|---|---|

| Catalog administrators | A role that has been granted multiple privileges to emulate full access to the catalog. Principal roles that have been granted this role are permitted to create, alter, read, write, and drop tables in the catalog. |

| Catalog readers | A role that has been granted read-only privileges to tables in the catalog. Principal roles that have been granted this role are allowed to read from tables in the catalog. |

| Catalog contributor | A role that has been granted read and write access privileges to all tables that belong to the catalog. Principal roles that have been granted this role are allowed to perform read and write operations on tables in the catalog. |

The following diagram illustrates the RBAC model used by Polaris. For each catalog, the Polaris administrator assigns access privileges to catalog roles and then grants service principals access to resources by assigning catalog roles to principal roles. Polaris supports a many-to-one relationship between service principals and principal roles.

This section describes the privileges that are available in the Polaris access control model. Privileges are granted to catalog roles, catalog roles are granted to principal roles, and principal roles are granted to service principals to specify the operations that service principals can perform on objects in Polaris.

Important

You can only grant privileges at the catalog level. Fine-grained access controls are not available. For example, you can grant read privileges to all tables in a catalog but not to an individual table in the catalog.

To grant the full set of privileges (drop, list, read, write, etc.) on an object, you can use the full privilege option.

Table privileges

| Privilege | Description |

|---|---|

| TABLE_CREATE | Enables registering a table with the catalog. |

| TABLE_DROP | Enables dropping a table from the catalog. |

| TABLE_LIST | Enables listing any tables in the catalog. |

| TABLE_READ_PROPERTIES | Enables reading properties of the table. |

| TABLE_WRITE_PROPERTIES | Enables configuring properties for the table. |

| TABLE_READ_DATA | Enables reading data from the table by receiving short-lived read-only storage credentials from the catalog. |

| TABLE_WRITE_DATA | Enables writing data to the table by receiving short-lived read+write storage credentials from the catalog. |

| TABLE_FULL_METADATA | Grants all table privileges, except TABLE_READ_DATA and TABLE_WRITE_DATA, which need to be granted individually. |
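The relationship between TABLE_FULL_METADATA and the individual table privileges can be written as a set expansion. The Python sketch below takes the privilege names from the table above; the function `expand_table_privileges` is hypothetical and only illustrates the rule that the two data privileges are never implied.

```python
TABLE_PRIVILEGES = {
    "TABLE_CREATE",
    "TABLE_DROP",
    "TABLE_LIST",
    "TABLE_READ_PROPERTIES",
    "TABLE_WRITE_PROPERTIES",
    "TABLE_READ_DATA",
    "TABLE_WRITE_DATA",
}
# These two must always be granted individually.
DATA_PRIVILEGES = {"TABLE_READ_DATA", "TABLE_WRITE_DATA"}


def expand_table_privileges(granted):
    """Return the granted set plus everything TABLE_FULL_METADATA implies."""
    result = set(granted)
    if "TABLE_FULL_METADATA" in result:
        result |= TABLE_PRIVILEGES - DATA_PRIVILEGES
    return result


metadata_only = expand_table_privileges({"TABLE_FULL_METADATA"})
print("TABLE_DROP" in metadata_only)
print("TABLE_READ_DATA" in metadata_only)

with_reads = expand_table_privileges({"TABLE_FULL_METADATA", "TABLE_READ_DATA"})
print("TABLE_READ_DATA" in with_reads)
```

A role that should manage table metadata and also read data therefore needs two grants: TABLE_FULL_METADATA and TABLE_READ_DATA.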

View privileges

| Privilege | Description |

|---|---|

| VIEW_CREATE | Enables registering a view with the catalog. |

| VIEW_DROP | Enables dropping a view from the catalog. |

| VIEW_LIST | Enables listing any views in the catalog. |

| VIEW_READ_PROPERTIES | Enables reading all the view properties. |

| VIEW_WRITE_PROPERTIES | Enables configuring view properties. |

| VIEW_FULL_METADATA | Grants all view privileges. |

Namespace privileges

| Privilege | Description |

|---|---|

| NAMESPACE_CREATE | Enables creating a namespace in a catalog. |

| NAMESPACE_DROP | Enables dropping the namespace from the catalog. |

| NAMESPACE_LIST | Enables listing any object in the namespace, including nested namespaces and tables. |

| NAMESPACE_READ_PROPERTIES | Enables reading all the namespace properties. |

| NAMESPACE_WRITE_PROPERTIES | Enables configuring namespace properties. |

| NAMESPACE_FULL_METADATA | Grants all namespace privileges. |

Catalog privileges

| Privilege | Description |

|---|---|

| CATALOG_MANAGE_ACCESS | Includes the ability to grant or revoke privileges on objects in a catalog to catalog roles, and the ability to grant or revoke catalog roles to or from principal roles. |

| CATALOG_MANAGE_CONTENT | Enables full management of content for the catalog. This privilege encompasses the following privileges: |

| CATALOG_MANAGE_METADATA | Enables full management of the catalog, catalog roles, namespaces, and tables. |

| CATALOG_READ_PROPERTIES | Enables listing catalogs and reading properties of the catalog. |

| CATALOG_WRITE_PROPERTIES | Enables configuring catalog properties. |

The following diagram illustrates how RBAC works in Polaris and includes the following users:

Alice: A service admin who signs up for Polaris. Alice can create service principals. She can also create catalogs and namespaces and configure access control for Polaris resources.

Bob: A data engineer who uses Apache Spark™ to interact with Polaris.

Alice has created a service principal for Bob. It has been granted the Data_engineer principal role, which in turn has been granted the following catalog roles: Catalog contributor and Data administrator (for both the Silver and Gold zone catalogs in the following diagram).

The Catalog contributor role grants permission to create namespaces and tables in the Bronze zone catalog.

The Data administrator roles grant full administrative rights to the Silver zone catalog and Gold zone catalog.

Mark: A data scientist who trains models with data managed by Polaris.

Alice has created a service principal for Mark. It has been granted the Data_scientist principal role, which in turn has been granted the catalog role named Catalog reader.

The Catalog reader role grants read-only access for a catalog named Gold zone catalog.

Configuring Apache Polaris (Incubating) for Production

The default polaris-server.yml configuration is intended for development and testing. When deploying Polaris in production, there are several best practices to keep in mind.

Security

Configurations

Notable configurations used to secure a Polaris deployment are outlined below.

oauth2

[!WARNING]

Ensure that the tokenBroker setting reflects the token broker specified in authenticator below.

- Configure OAuth with this setting. Remove the TestInlineBearerTokenPolarisAuthenticator option and uncomment the DefaultPolarisAuthenticator authenticator option beneath it.

- Then, configure the token broker. You can configure the token broker to use either asymmetric or symmetric keys.

authenticator.tokenBroker

[!WARNING]

Ensure that the tokenBroker setting reflects the token broker specified in oauth2 above.

callContextResolver & realmContextResolver

- Use these configurations to specify a service that can resolve a realm from bearer tokens.

- The service(s) used here must implement the relevant interfaces (i.e. CallContextResolver and RealmContextResolver).

Metastore Management

[!IMPORTANT]

The default in-memory implementation for metastoreManager is meant for testing and is not suitable for production usage. Instead, consider an implementation such as eclipse-link, which allows you to store metadata in a remote database.

A Metastore Manager should be configured with an implementation that durably persists Polaris entities. Use the configuration metaStoreManager to configure a MetastoreManager implementation where Polaris entities will be persisted.

Be sure to secure your metastore backend since it will be storing credentials and catalog metadata.

Configuring EclipseLink

To use EclipseLink for metastore management, set the configuration metaStoreManager.conf-file to point to an EclipseLink persistence.xml file. This file, local to the Polaris service, contains details of the database used for metastore management and the connection settings. For more information, refer to the metastore documentation.

Bootstrapping

If you use a metastore manager other than in-memory, you must bootstrap it before using Polaris. This is a manual operation that must be performed only once to prepare the metastore manager to integrate with Polaris. When the metastore manager is bootstrapped, any existing Polaris entities in the metastore manager may be purged.

To bootstrap Polaris, run:

java -jar /path/to/jar/polaris-service-all.jar bootstrap polaris-server.yml

Afterwards, Polaris can be launched normally:

java -jar /path/to/jar/polaris-service-all.jar server polaris-server.yml

Other Configurations

When deploying Polaris in production, consider adjusting the following configurations:

featureConfiguration.SUPPORTED_CATALOG_STORAGE_TYPES

- By default, Polaris catalogs are allowed to be located in the local filesystem with the FILE storage type. This should be disabled for production systems.

- Use this configuration to additionally disable any other storage types that will not be in use.

listCatalogs

List all catalogs in this polaris service

Authorizations:

Responses

Response samples

- 200

{
  "catalogs": [
    {
      "type": "INTERNAL",
      "name": "string",
      "properties": {
        "default-base-location": "string",
        "property1": "string",
        "property2": "string"
      },
      "createTimestamp": 0,
      "lastUpdateTimestamp": 0,
      "entityVersion": 0,
      "storageConfigInfo": {
        "storageType": "S3",
        "allowedLocations": "For AWS [s3://bucketname/prefix/], for AZURE [abfss://container@storageaccount.blob.core.windows.net/prefix/], for GCP [gs://bucketname/prefix/]"
      }
    }
  ]
}

createCatalog

Add a new Catalog

Authorizations:

Request Body schema: application/json (required)

The Catalog to create

required | object (Polaris_Management_Service_Catalog) A catalog object. A catalog may be internal or external. External catalogs are managed entirely by an external catalog interface. Third party catalogs may be other Iceberg REST implementations or other services with their own proprietary APIs |

Responses

Request samples

- Payload

{
  "catalog": {
    "type": "INTERNAL",
    "name": "string",
    "properties": {
      "default-base-location": "string",
      "property1": "string",
      "property2": "string"
    },
    "createTimestamp": 0,
    "lastUpdateTimestamp": 0,
    "entityVersion": 0,
    "storageConfigInfo": {
      "storageType": "S3",
      "allowedLocations": "For AWS [s3://bucketname/prefix/], for AZURE [abfss://container@storageaccount.blob.core.windows.net/prefix/], for GCP [gs://bucketname/prefix/]"
    }
  }
}

getCatalog

Get the details of a catalog

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog |
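The name constraint above (1 to 256 characters, plus the regular expression) can be checked client-side before sending a request. The following is a minimal sketch: the pattern is copied from the parameter description, while the `is_valid_name` helper is a hypothetical name introduced for illustration. Whether the service validates names in exactly this way is an assumption.

```python
import re

# Pattern copied verbatim from the parameter description above.
# The negative lookahead rejects names that begin (after optional whitespace)
# with "system$" in any letter case.
NAME_PATTERN = re.compile(r"^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$")


def is_valid_name(name):
    """Return True if the name is 1 to 256 characters long and matches the pattern."""
    return 1 <= len(name) <= 256 and NAME_PATTERN.match(name) is not None
```

For example, `is_valid_name("sales_catalog")` is true and `is_valid_name("SYSTEM$internal")` is false. A name such as `system` without the trailing `$` still passes, since the lookahead only rejects the `system$` prefix.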

Responses

Response samples

- 200

{
  "type": "INTERNAL",
  "name": "string",
  "properties": {
    "default-base-location": "string",
    "property1": "string",
    "property2": "string"
  },
  "createTimestamp": 0,
  "lastUpdateTimestamp": 0,
  "entityVersion": 0,
  "storageConfigInfo": {
    "storageType": "S3",
    "allowedLocations": "For AWS [s3://bucketname/prefix/], for AZURE [abfss://container@storageaccount.blob.core.windows.net/prefix/], for GCP [gs://bucketname/prefix/]"
  }
}

updateCatalog

Update an existing catalog

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog |

Request Body schema: application/json (required)

The catalog details to use in the update

| currentEntityVersion | integer The version of the object onto which this update is applied; if the object changed, the update will fail and the caller should retry after fetching the latest version. |

object | |

object (Polaris_Management_Service_StorageConfigInfo) A storage configuration used by catalogs |
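The currentEntityVersion field implies an optimistic-concurrency pattern: read the entity, send the update with the version you read, and retry from a fresh read if the version is stale. The sketch below illustrates that loop against an in-memory stand-in. `FakeCatalogStore`, `VersionConflict`, and `update_with_retry` are hypothetical names, and the details (the version increasing by one on each update, properties being merged client-side) are assumptions, not documented Polaris behavior.

```python
class VersionConflict(Exception):
    """Raised by the stand-in store when currentEntityVersion is stale."""


class FakeCatalogStore:
    """Hypothetical stand-in for the service; holds a single catalog entity."""

    def __init__(self):
        self.entity = {"name": "demo", "properties": {}, "entityVersion": 1}

    def get(self):
        # Return a copy so callers cannot mutate the stored entity directly.
        return {**self.entity, "properties": dict(self.entity["properties"])}

    def update(self, current_entity_version, properties):
        # Reject the update if the caller read an older version.
        if current_entity_version != self.entity["entityVersion"]:
            raise VersionConflict("entity changed since it was read")
        self.entity["properties"] = dict(properties)
        self.entity["entityVersion"] += 1  # assumed versioning scheme
        return self.get()


def update_with_retry(store, new_properties, max_attempts=3):
    """Fetch the latest version, apply the change, and retry on conflict."""
    for _ in range(max_attempts):
        latest = store.get()
        merged = {**latest["properties"], **new_properties}
        try:
            return store.update(latest["entityVersion"], merged)
        except VersionConflict:
            continue  # someone else updated first; re-read and try again
    raise VersionConflict("gave up after %d attempts" % max_attempts)
```

A real client would replace the store calls with getCatalog and updateCatalog requests and treat the service's conflict response as the retry signal.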

Responses

Request samples

- Payload

{
  "currentEntityVersion": 0,
  "properties": {
    "property1": "string",
    "property2": "string"
  },
  "storageConfigInfo": {
    "storageType": "S3",
    "allowedLocations": "For AWS [s3://bucketname/prefix/], for AZURE [abfss://container@storageaccount.blob.core.windows.net/prefix/], for GCP [gs://bucketname/prefix/]"
  }
}

Response samples

- 200

{
  "type": "INTERNAL",
  "name": "string",
  "properties": {
    "default-base-location": "string",
    "property1": "string",
    "property2": "string"
  },
  "createTimestamp": 0,
  "lastUpdateTimestamp": 0,
  "entityVersion": 0,
  "storageConfigInfo": {
    "storageType": "S3",
    "allowedLocations": "For AWS [s3://bucketname/prefix/], for AZURE [abfss://container@storageaccount.blob.core.windows.net/prefix/], for GCP [gs://bucketname/prefix/]"
  }
}

listPrincipals

List the principals for the current catalog

Authorizations:

Responses

Response samples

- 200

{
  "principals": [
    {
      "name": "string",
      "clientId": "string",
      "properties": {
        "property1": "string",
        "property2": "string"
      },
      "createTimestamp": 0,
      "lastUpdateTimestamp": 0,
      "entityVersion": 0
    }
  ]
}

createPrincipal

Create a principal

Authorizations:

Request Body schema: application/json (required)

The principal to create

object (Polaris_Management_Service_Principal) A Polaris principal. | |

| credentialRotationRequired | boolean If true, the initial credentials can only be used to call rotateCredentials |

Responses

Request samples

- Payload

{
  "principal": {
    "name": "string",
    "clientId": "string",
    "properties": {
      "property1": "string",
      "property2": "string"
    },
    "createTimestamp": 0,
    "lastUpdateTimestamp": 0,
    "entityVersion": 0
  },
  "credentialRotationRequired": true
}

Response samples

- 201

{
  "principal": {
    "name": "string",
    "clientId": "string",
    "properties": {
      "property1": "string",
      "property2": "string"
    },
    "createTimestamp": 0,
    "lastUpdateTimestamp": 0,
    "entityVersion": 0
  },
  "credentials": {
    "clientId": "string",
    "clientSecret": "pa$$word"
  }
}

getPrincipal

Get the principal details

Authorizations:

path Parameters

| principalName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The principal name |

Responses

Response samples

- 200

{
  "name": "string",
  "clientId": "string",
  "properties": {
    "property1": "string",
    "property2": "string"
  },
  "createTimestamp": 0,
  "lastUpdateTimestamp": 0,
  "entityVersion": 0
}

updatePrincipal

Update an existing principal

Authorizations:

path Parameters

| principalName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The principal name |

Request Body schema: application/json (required)

The principal details to use in the update

| currentEntityVersion required | integer The version of the object onto which this update is applied; if the object changed, the update will fail and the caller should retry after fetching the latest version. |

required | object |

Responses

Request samples

- Payload

{
  "currentEntityVersion": 0,
  "properties": {
    "property1": "string",
    "property2": "string"
  }
}

Response samples

- 200

{
  "name": "string",
  "clientId": "string",
  "properties": {
    "property1": "string",
    "property2": "string"
  },
  "createTimestamp": 0,
  "lastUpdateTimestamp": 0,
  "entityVersion": 0
}

rotateCredentials

Rotate a principal's credentials. The new credentials will be returned in the response. This is the only API, aside from createPrincipal, that returns the user's credentials. This API is not idempotent.

Authorizations:

path Parameters

| principalName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The user name |

Responses

Response samples

- 200

{
  "principal": {
    "name": "string",
    "clientId": "string",
    "properties": {
      "property1": "string",
      "property2": "string"
    },
    "createTimestamp": 0,
    "lastUpdateTimestamp": 0,
    "entityVersion": 0
  },
  "credentials": {
    "clientId": "string",
    "clientSecret": "pa$$word"
  }
}

listPrincipalRolesAssigned

List the roles assigned to the principal

Authorizations:

path Parameters

| principalName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the target principal |

Responses

Response samples

- 200

{
  "roles": [
    {
      "name": "string",
      "properties": {
        "property1": "string",
        "property2": "string"
      },
      "createTimestamp": 0,
      "lastUpdateTimestamp": 0,
      "entityVersion": 0
    }
  ]
}

assignPrincipalRole

Add a role to the principal

Authorizations:

path Parameters

| principalName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the target principal |

Request Body schema: application/json (required)

The principal role to assign

object (Polaris_Management_Service_PrincipalRole) |

Responses

Request samples

- Payload

{
  "principalRole": {
    "name": "string",
    "properties": {
      "property1": "string",
      "property2": "string"
    },
    "createTimestamp": 0,
    "lastUpdateTimestamp": 0,
    "entityVersion": 0
  }
}

revokePrincipalRole

Remove a role from a catalog principal

Authorizations:

path Parameters

| principalName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the target principal |

| principalRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the role |

Responses

listPrincipalRoles

List the principal roles

Authorizations:

Responses

Response samples

- 200

{
  "roles": [
    {
      "name": "string",
      "properties": {
        "property1": "string",
        "property2": "string"
      },
      "createTimestamp": 0,
      "lastUpdateTimestamp": 0,
      "entityVersion": 0
    }
  ]
}

createPrincipalRole

Create a principal role

Authorizations:

Request Body schema: application/json (required)

The principal role to create

object (Polaris_Management_Service_PrincipalRole) |

Responses

Request samples

- Payload

{
  "principalRole": {
    "name": "string",
    "properties": {
      "property1": "string",
      "property2": "string"
    },
    "createTimestamp": 0,
    "lastUpdateTimestamp": 0,
    "entityVersion": 0
  }
}

getPrincipalRole

Get the principal role details

Authorizations:

path Parameters

| principalRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The principal role name |

Responses

Response samples

- 200

{
  "name": "string",
  "properties": {
    "property1": "string",
    "property2": "string"
  },
  "createTimestamp": 0,
  "lastUpdateTimestamp": 0,
  "entityVersion": 0
}

updatePrincipalRole

Update an existing principalRole

Authorizations:

path Parameters

| principalRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The principal role name |

Request Body schema: application/json (required)

The principalRole details to use in the update

| currentEntityVersion required | integer The version of the object onto which this update is applied; if the object changed, the update will fail and the caller should retry after fetching the latest version. |

required | object |

Responses

Request samples

- Payload

{
  "currentEntityVersion": 0,
  "properties": {
    "property1": "string",
    "property2": "string"
  }
}

Response samples

- 200

{
  "name": "string",
  "properties": {
    "property1": "string",
    "property2": "string"
  },
  "createTimestamp": 0,
  "lastUpdateTimestamp": 0,
  "entityVersion": 0
}

listAssigneePrincipalsForPrincipalRole

List the Principals to whom the target principal role has been assigned

Authorizations:

path Parameters

| principalRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The principal role name |

Responses

Response samples

- 200

{
  "principals": [
    {
      "name": "string",
      "clientId": "string",
      "properties": {
        "property1": "string",
        "property2": "string"
      },
      "createTimestamp": 0,
      "lastUpdateTimestamp": 0,
      "entityVersion": 0
    }
  ]
}

listCatalogRolesForPrincipalRole

Get the catalog roles mapped to the principal role

Authorizations:

path Parameters

| principalRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The principal role name |

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog where the catalogRoles reside |

Responses

Response samples

- 200

{
  "roles": [
    {
      "name": "string",
      "properties": {
        "property1": "string",
        "property2": "string"
      },
      "createTimestamp": 0,
      "lastUpdateTimestamp": 0,
      "entityVersion": 0
    }
  ]
}

assignCatalogRoleToPrincipalRole

Assign a catalog role to a principal role

Authorizations:

path Parameters

| principalRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The principal role name |

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog where the catalogRoles reside |

Request Body schema: application/json (required)

The catalog role to assign

object (Polaris_Management_Service_CatalogRole) |

Responses

Request samples

- Payload

{
  "catalogRole": {
    "name": "string",
    "properties": {
      "property1": "string",
      "property2": "string"
    },
    "createTimestamp": 0,
    "lastUpdateTimestamp": 0,
    "entityVersion": 0
  }
}

revokeCatalogRoleFromPrincipalRole

Remove a catalog role from a principal role

Authorizations:

path Parameters

| principalRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The principal role name |

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog that contains the role to revoke |

| catalogRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog role that should be revoked |

Responses

listCatalogRoles

List existing roles in the catalog

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The catalog for which we are reading/updating roles |

Responses

Response samples

- 200

{
  "roles": [
    {
      "name": "string",
      "properties": {
        "property1": "string",
        "property2": "string"
      },
      "createTimestamp": 0,
      "lastUpdateTimestamp": 0,
      "entityVersion": 0
    }
  ]
}

createCatalogRole

Create a new role in the catalog

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The catalog for which we are reading/updating roles |

Request Body schema: application/json

object (Polaris_Management_Service_CatalogRole) |

Responses

Request samples

- Payload

{
  "catalogRole": {
    "name": "string",
    "properties": {
      "property1": "string",
      "property2": "string"
    },
    "createTimestamp": 0,
    "lastUpdateTimestamp": 0,
    "entityVersion": 0
  }
}

getCatalogRole

Get the details of an existing role

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The catalog for which we are retrieving roles |

| catalogRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the role |

Responses

Response samples

- 200

{
  "name": "string",
  "properties": {
    "property1": "string",
    "property2": "string"
  },
  "createTimestamp": 0,
  "lastUpdateTimestamp": 0,
  "entityVersion": 0
}

updateCatalogRole

Update an existing role in the catalog

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The catalog for which we are retrieving roles |

| catalogRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the role |

Request Body schema: application/json

| currentEntityVersion required | integer The version of the object onto which this update is applied; if the object changed, the update will fail and the caller should retry after fetching the latest version. |

required | object |

Responses

Request samples

- Payload

{
  "currentEntityVersion": 0,
  "properties": {
    "property1": "string",
    "property2": "string"
  }
}

Response samples

- 200

{
  "name": "string",
  "properties": {
    "property1": "string",
    "property2": "string"
  },
  "createTimestamp": 0,
  "lastUpdateTimestamp": 0,
  "entityVersion": 0
}

deleteCatalogRole

Delete an existing role from the catalog. All associated grants will also be deleted

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The catalog for which we are retrieving roles |

| catalogRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the role |

Responses

listAssigneePrincipalRolesForCatalogRole

List the PrincipalRoles to which the target catalog role has been assigned

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog where the catalog role resides |

| catalogRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog role |

Responses

Response samples

- 200

{
  "roles": [
    {
      "name": "string",
      "properties": {
        "property1": "string",
        "property2": "string"
      },
      "createTimestamp": 0,
      "lastUpdateTimestamp": 0,
      "entityVersion": 0
    }
  ]
}

listGrantsForCatalogRole

List the grants the catalog role holds

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog where the role will receive the grant |

| catalogRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the role receiving the grant (must exist) |

Responses

Response samples

- 200

{
  "grants": [
    {
      "type": "catalog"
    }
  ]
}

addGrantToCatalogRole

Add a new grant to the catalog role

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog where the role will receive the grant |

| catalogRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the role receiving the grant (must exist) |

Request Body schema: application/json

object (Polaris_Management_Service_GrantResource) |

Responses

Request samples

- Payload

{
  "grant": {
    "type": "catalog"
  }
}

revokeGrantFromCatalogRole

Delete a specific grant from the role. This may be a subset or a superset of the grants the role has. In case of a subset, the role will retain the grants not specified. If the cascade parameter is true, grant revocation will have a cascading effect - that is, if a principal has specific grants on a subresource, and grants are revoked on a parent resource, the grants present on the subresource will be revoked as well. By default, this behavior is disabled and grant revocation only affects the specified resource.

Authorizations:

path Parameters

| catalogName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the catalog where the role will receive the grant |

| catalogRoleName required | string [ 1 .. 256 ] characters ^(?!\s*[s|S][y|Y][s|S][t|T][e|E][m|M]\$).*$ The name of the role receiving the grant (must exist) |

query Parameters

| cascade | boolean Default: false If true, the grant revocation cascades to all subresources. |
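The effect of the cascade parameter can be illustrated with a small in-memory model. This is a sketch under stated assumptions, not the service's implementation: grants are modeled as `(resource_path, privilege)` pairs, resource paths are tuples such as `("catalog", "namespace")`, the `READ` and `WRITE` labels are placeholders, and cascading is assumed to remove only the same privilege on subresources. The `revoke_grant` function is a hypothetical name.

```python
def revoke_grant(grants, resource, privilege, cascade=False):
    """Return a new grant set with the given privilege revoked on the resource.

    grants:   set of (resource_path, privilege) pairs held by one role
    resource: tuple path of the resource named in the revocation
    cascade:  when True, also revoke the privilege on every subresource
    """
    def affected(path):
        if path == resource:
            return True
        # A subresource is any path that starts with the parent's path.
        return cascade and path[:len(resource)] == resource

    return {
        (path, priv)
        for path, priv in grants
        if not (priv == privilege and affected(path))
    }
```

With `cascade=False` (the default), a grant on `("c", "ns")` survives a revocation on `("c",)`. With `cascade=True`, the same revocation also removes it.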

Request Body schema: application/json

object (Polaris_Management_Service_GrantResource) |

Responses

Request samples

- Payload

{
  "grant": {
    "type": "catalog"
  }
}

List all catalog configuration settings

All REST clients should first call this route to get catalog configuration properties from the server to configure the catalog and its HTTP client. Configuration from the server consists of two sets of key/value pairs.

- defaults - properties that should be used as default configuration; applied before client configuration

- overrides - properties that should be used to override client configuration; applied after defaults and client configuration

Catalog configuration is constructed by setting the defaults, then client-provided configuration, and finally overrides. The final property set is then used to configure the catalog.

For example, a default configuration property might set the size of the client pool, which can be replaced with a client-specific setting. An override might be used to set the warehouse location, which is stored on the server rather than in client configuration.

Common catalog configuration settings are documented at https://iceberg.apache.org/docs/latest/configuration/#catalog-properties
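The precedence described above (defaults, then client configuration, then overrides) amounts to a layered dictionary merge. The following minimal sketch shows that order. The `resolve_catalog_config` name and the sample client values are assumptions for illustration.

```python
def resolve_catalog_config(defaults, client_config, overrides):
    """Merge configuration layers so that later layers win on key conflicts."""
    merged = dict(defaults)        # server-provided defaults are applied first
    merged.update(client_config)   # client settings replace defaults
    merged.update(overrides)       # server overrides replace everything else
    return merged


# Server response values taken from the sample in this section;
# the client values are made up for the example.
config = resolve_catalog_config(
    defaults={"clients": "4"},
    client_config={"clients": "8", "warehouse": "s3://other/"},
    overrides={"warehouse": "s3://bucket/warehouse/"},
)
```

Here the client's `clients` setting replaces the server default, while the server's `warehouse` override replaces the client's value.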

Authorizations:

query Parameters

| warehouse | string Warehouse location or identifier to request from the service |

Responses

Response samples

- 200

- 400

- 401

- 403

- 419

- 503

- 5XX

{
  "overrides": {
    "warehouse": "s3://bucket/warehouse/"
  },
  "defaults": {
    "clients": "4"
  }
}

Get a token using an OAuth2 flow

Exchange credentials for a token using the OAuth2 client credentials flow or token exchange.

This endpoint is used for three purposes -

- To exchange client credentials (client ID and secret) for an access token. This uses the client credentials flow.

- To exchange a client token and an identity token for a more specific access token. This uses the token exchange flow.

- To exchange an access token for one with the same claims and a refreshed expiration period. This uses the token exchange flow.

For example, a catalog client may be configured with client credentials from the OAuth2 Authorization flow. This client would exchange its client ID and secret for an access token using the client credentials request with this endpoint (1). Subsequent requests would then use that access token.

Some clients may also handle sessions that have additional user context. These clients would use the token exchange flow to exchange a user token (the "subject" token) from the session for a more specific access token for that user, using the catalog's access token as the "actor" token (2). The user ID token is the "subject" token and can be any token type allowed by the OAuth2 token exchange flow, including an unsecured JWT token with a sub claim. This request should use the catalog's bearer token in the "Authorization" header.

Clients may also use the token exchange flow to refresh a token that is about to expire by sending a token exchange request (3). The request's "subject" token should be the expiring token. This request should use the subject token in the "Authorization" header.

Authorizations:

header Parameters

| Authorization | string |

Request Body schema: application/x-www-form-urlencoded (required)

| grant_type required | string Value: "client_credentials" |

| scope | string |

| client_id required | string Client ID This can be sent in the request body, but OAuth2 recommends sending it in a Basic Authorization header. |

| client_secret required | string Client secret This can be sent in the request body, but OAuth2 recommends sending it in a Basic Authorization header. |
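As an illustration of the client credentials request, the sketch below builds the headers and form-encoded body without sending anything. It follows the note above and places the client ID and secret in a Basic Authorization header. The `build_client_credentials_request` helper is a hypothetical name, and the endpoint URL is deliberately omitted because it is not given in this section.

```python
import base64
from urllib.parse import urlencode


def build_client_credentials_request(client_id, client_secret, scope=None):
    """Return (headers, body) for a client credentials token request."""
    # Basic auth is base64("client_id:client_secret").
    raw = f"{client_id}:{client_secret}".encode("utf-8")
    basic = base64.b64encode(raw).decode("ascii")
    headers = {
        "Authorization": f"Basic {basic}",
        "Content-Type": "application/x-www-form-urlencoded",
    }
    form = {"grant_type": "client_credentials"}
    if scope is not None:
        form["scope"] = scope
    return headers, urlencode(form)
```

The returned body can be posted to the token endpoint with any HTTP client. The access_token field of the response is then sent as a bearer token on subsequent requests.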

Responses

Response samples

- 200

- 400

- 401

- 5XX

{
  "access_token": "string",
  "token_type": "bearer",
  "expires_in": 0,
  "issued_token_type": "urn:ietf:params:oauth:token-type:access_token",
  "refresh_token": "string",
  "scope": "string"
}

List namespaces, optionally providing a parent namespace to list underneath

List all namespaces at a certain level, optionally starting from a given parent namespace. If table accounting.tax.paid.info exists, using 'SELECT NAMESPACE IN accounting' would translate into GET /namespaces?parent=accounting and must return a namespace, ["accounting", "tax"] only. Using 'SELECT NAMESPACE IN accounting.tax' would translate into GET /namespaces?parent=accounting%1Ftax and must return a namespace, ["accounting", "tax", "paid"]. If parent is not provided, all top-level namespaces should be listed.

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

query Parameters

| pageToken | string or null (Apache_Iceberg_REST_Catalog_API_PageToken) An opaque token that allows clients to make use of pagination for list APIs (e.g. ListTables). Clients may initiate the first paginated request by sending an empty query parameter |

| pageSize | integer >= 1 For servers that support pagination, this signals an upper bound of the number of results that a client will receive. For servers that do not support pagination, clients may receive results larger than the indicated |

| parent | string Example: parent=accounting%1Ftax An optional namespace, underneath which to list namespaces. If not provided or empty, all top-level namespaces should be listed. If parent is a multipart namespace, the parts must be separated by the unit separator ( |
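The parent example above (`accounting%1Ftax`) is the percent-encoded form of the namespace parts joined with the ASCII unit separator (0x1F). The sketch below shows that encoding, plus a small in-memory version of the "one level below the parent" listing rule from the description. The helper names are hypothetical, and the listing function is an interpretation of the prose, not server code.

```python
from urllib.parse import quote, unquote

UNIT_SEPARATOR = "\x1f"  # ASCII unit separator, percent-encoded as %1F


def encode_namespace(parts):
    """Join namespace parts with 0x1F and percent-encode for use in a URL."""
    return quote(UNIT_SEPARATOR.join(parts), safe="")


def decode_namespace(value):
    """Reverse encode_namespace: percent-decode, then split on 0x1F."""
    return unquote(value).split(UNIT_SEPARATOR)


def child_namespaces(all_namespaces, parent=()):
    """Return the distinct namespaces exactly one level below parent."""
    parent = list(parent)
    depth = len(parent) + 1
    children = []
    for namespace in all_namespaces:
        if len(namespace) >= depth and namespace[:len(parent)] == parent:
            child = namespace[:depth]
            if child not in children:
                children.append(child)
    return children
```

For a table in `accounting.tax.paid`, listing under `["accounting"]` yields `["accounting", "tax"]`, and listing with no parent yields the top-level `["accounting"]`.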

Responses

Response samples

- 200

- 400

- 401

- 403

- 404

- 419

- 503

- 5XX

{
  "namespaces": [
    [
      "accounting",
      "tax"
    ],
    [
      "accounting",
      "credits"
    ]
  ]
}

Create a namespace

Create a namespace, with an optional set of properties. The server might also add properties, such as last_modified_time etc.

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

Request Body schema: application/json (required)

| namespace required | Array of strings (Apache_Iceberg_REST_Catalog_API_Namespace) Reference to one or more levels of a namespace |

object Default: {} Configured string to string map of properties for the namespace |

Responses

Request samples

- Payload

{
  "namespace": [
    "accounting",
    "tax"
  ],
  "properties": {
    "owner": "Hank Bendickson"
  }
}

Response samples

- 200

- 400

- 401

- 403

- 406

- 409

- 419

- 503

- 5XX

{
  "namespace": [
    "accounting",
    "tax"
  ],
  "properties": {
    "owner": "Ralph",
    "created_at": "1452120468"
  }
}

Load the metadata properties for a namespace

Return all stored metadata properties for a given namespace

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

| namespace required | string Examples:

A namespace identifier as a single string. Multipart namespace parts should be separated by the unit separator ( |

Responses

Response samples

- 200

- 400

- 401

- 403

- 404

- 419

- 503

- 5XX

{
  "namespace": [
    "accounting",
    "tax"
  ],
  "properties": {
    "owner": "Ralph",
    "transient_lastDdlTime": "1452120468"
  }
}

Check if a namespace exists

Check if a namespace exists. The response does not contain a body.

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

| namespace required | string Examples:

A namespace identifier as a single string. Multipart namespace parts should be separated by the unit separator ( |

Responses

Response samples

- 400

- 401

- 403

- 404

- 419

- 503

- 5XX

{
  "error": {
    "message": "Malformed request",
    "type": "BadRequestException",
    "code": 400
  }
}

Drop a namespace from the catalog. Namespace must be empty.

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

| namespace required | string A namespace identifier as a single string. Multipart namespace parts should be separated by the unit separator ( |

Responses

Response samples

- 400

- 401

- 403

- 404

- 419

- 503

- 5XX

{
  "error": {
    "message": "Malformed request",
    "type": "BadRequestException",
    "code": 400
  }
}

Set or remove properties on a namespace

Set and/or remove properties on a namespace. The request body specifies a list of properties to remove and a map of key value pairs to update. Properties that are not in the request are not modified or removed by this call. Server implementations are not required to support namespace properties.
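To make these semantics concrete, the following sketch simulates them locally on a plain dictionary. It is not server code: the function name is hypothetical, and rejecting a key that appears in both removals and updates is an assumption of this sketch. The returned report uses the updated, removed, and missing keys shown in the response sample for this operation.

```python
def update_namespace_properties(current, removals=(), updates=None):
    """Apply removals and updates to a copy of `current`; return (properties, report)."""
    updates = dict(updates or {})
    overlap = set(removals) & set(updates)
    if overlap:
        # Assumption: a key cannot be removed and updated in the same request.
        raise ValueError(f"keys in both removals and updates: {sorted(overlap)}")

    result = dict(current)  # properties not named in the request stay untouched
    removed, missing = [], []
    for key in removals:
        if key in result:
            del result[key]
            removed.append(key)
        else:
            missing.append(key)  # asked to remove a key that was not set
    result.update(updates)

    report = {"updated": sorted(updates), "removed": removed, "missing": missing}
    return result, report


props, report = update_namespace_properties(
    {"foo": "1", "owner": "Ralph", "team": "finance"},
    removals=["foo", "bar"],
    updates={"owner": "Raoul"},
)
print(props)
print(report)
```

Here "team" survives unchanged because it appears in neither list, "foo" is reported as removed, and "bar" is reported as missing.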

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

| namespace required | string A namespace identifier as a single string. Multipart namespace parts should be separated by the unit separator ( |

Request Body schema: application/json (required)

| removals | Array of strings unique |

| updates | object |

Responses

Request samples

- Payload

{
  "removals": ["foo", "bar"],
  "updates": {
    "owner": "Raoul"
  }
}

Response samples

- 200

- 400

- 401

- 403

- 404

- 406

- 419

- 422

- 503

- 5XX

{
  "updated": ["owner"],
  "removed": ["foo"],
  "missing": ["bar"]
}

List all table identifiers underneath a given namespace

Return all table identifiers under this namespace

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

| namespace required | string A namespace identifier as a single string. Multipart namespace parts should be separated by the unit separator ( |

query Parameters

| pageToken | string or null (Apache_Iceberg_REST_Catalog_API_PageToken) An opaque token that allows clients to make use of pagination for list APIs (e.g. ListTables). Clients may initiate the first paginated request by sending an empty query parameter |

| pageSize | integer >= 1 For servers that support pagination, this signals an upper bound of the number of results that a client will receive. For servers that do not support pagination, clients may receive results larger than the indicated |
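A client-side pagination loop built on these two parameters might look like the sketch below. The transport is abstracted behind a caller-supplied fetch_page function, which is hypothetical, and the name of the response field carrying the continuation token ("next-page-token") is an assumption not stated in this section. The in-memory fake_fetch stands in for a server so the example runs without a network.

```python
def list_all_tables(fetch_page, page_size=100):
    """Collect identifiers from every page returned by `fetch_page`."""
    if page_size < 1:
        raise ValueError("pageSize must be >= 1")
    identifiers = []
    token = ""  # an empty pageToken starts the first paginated request
    while True:
        page = fetch_page(page_token=token, page_size=page_size)
        identifiers.extend(page.get("identifiers", []))
        token = page.get("next-page-token")  # assumed field name
        if not token:
            return identifiers  # no token left: this was the last page


def fake_fetch(page_token, page_size):
    """In-memory stand-in for the server; the token is just a list offset."""
    tables = ["paid", "owed", "refunds"]
    start = int(page_token or 0)
    end = start + page_size
    return {
        "identifiers": [
            {"namespace": ["accounting", "tax"], "name": name}
            for name in tables[start:end]
        ],
        "next-page-token": str(end) if end < len(tables) else None,
    }


names = [t["name"] for t in list_all_tables(fake_fetch, page_size=2)]
print(names)
```

Real tokens are opaque, so a client should pass them back unchanged rather than parse them as fake_fetch does.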

Responses

Response samples

- 200

- 400

- 401

- 403

- 404

- 419

- 503

- 5XX

{
  "identifiers": [
    {
      "namespace": ["accounting", "tax"],
      "name": "paid"
    },
    {
      "namespace": ["accounting", "tax"],
      "name": "owed"
    }
  ]
}

Create a table in the given namespace

Create a table or start a create transaction, like atomic CTAS.

If stage-create is false, the table is created immediately.

If stage-create is true, the table is not created, but table metadata is initialized and returned. The service should prepare as needed for a commit to the table commit endpoint to complete the create transaction. The client uses the returned metadata to begin a transaction. To commit the transaction, the client sends all create and subsequent changes to the table commit route. Changes from the table create operation include changes like AddSchemaUpdate and SetCurrentSchemaUpdate that set the initial table state.
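The difference between the two modes is a single flag in the request body. The sketch below builds a minimal body with only the required name and schema plus stage-create. The helper name is hypothetical, and assigning field ids sequentially from 1 is a simplification made for this example.

```python
def create_table_body(name, columns, stage_create=False, properties=None):
    """Build a minimal create-table request body.

    `columns` is a list of (name, type, required) tuples.
    """
    if not name:
        raise ValueError("table name is required")
    schema = {
        "type": "struct",
        "fields": [
            {"id": field_id, "name": col, "type": col_type, "required": required}
            for field_id, (col, col_type, required) in enumerate(columns, start=1)
        ],
    }
    body = {"name": name, "schema": schema, "stage-create": stage_create}
    if properties:
        body["properties"] = dict(properties)
    return body


columns = [("id", "long", True), ("amount", "decimal(10,2)", False)]
immediate = create_table_body("paid", columns)                   # created right away
staged = create_table_body("paid", columns, stage_create=True)   # metadata only
print(immediate["stage-create"], staged["stage-create"])
```

With the staged body, the table exists only after the client sends the create changes and any later changes to the table commit route.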

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

| namespace required | string A namespace identifier as a single string. Multipart namespace parts should be separated by the unit separator ( |

header Parameters

| X-Iceberg-Access-Delegation | string Enum: "vended-credentials" "remote-signing" Example: vended-credentials,remote-signing Optional signal to the server that the client supports delegated access via a comma-separated list of access mechanisms. The server may choose to supply access via any or none of the requested mechanisms. Specific properties and handling for The protocol and specification for |
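As a small illustration of the comma-separated format, the header could be assembled as follows. The helper is hypothetical; the two mechanism names are the enum values listed above.

```python
SUPPORTED_MECHANISMS = ("vended-credentials", "remote-signing")


def access_delegation_header(*mechanisms):
    """Return a headers dict advertising the delegation mechanisms a client supports."""
    unknown = [m for m in mechanisms if m not in SUPPORTED_MECHANISMS]
    if unknown:
        raise ValueError(f"unsupported access mechanisms: {unknown}")
    if not mechanisms:
        return {}  # the header is optional; omit it when nothing is requested
    unique = list(dict.fromkeys(mechanisms))  # drop duplicates, keep order
    return {"X-Iceberg-Access-Delegation": ",".join(unique)}


print(access_delegation_header("vended-credentials", "remote-signing"))
```

Because the server may grant any or none of the requested mechanisms, a client should inspect the response rather than assume its request was honored.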

Request Body schema: application/json (required)

| name required | string |

| location | string |

| schema required | object (Apache_Iceberg_REST_Catalog_API_Schema) |

| partition-spec | object (Apache_Iceberg_REST_Catalog_API_PartitionSpec) |

| write-order | object (Apache_Iceberg_REST_Catalog_API_SortOrder) |

| stage-create | boolean |

| properties | object |

Responses

Request samples

- Payload

{
  "name": "string",
  "location": "string",
  "schema": {
    "type": "struct",
    "fields": [
      {
        "id": 0,
        "name": "string",
        "type": ["long", "string", "fixed[16]", "decimal(10,2)"],
        "required": true,
        "doc": "string"
      }
    ],
    "identifier-field-ids": [0]
  },
  "partition-spec": {
    "fields": [
      {
        "field-id": 0,
        "source-id": 0,
        "name": "string",
        "transform": ["identity", "year", "month", "day", "hour", "bucket[256]", "truncate[16]"]
      }
    ]
  },
  "write-order": {
    "fields": [
      {
        "source-id": 0,
        "transform": ["identity", "year", "month", "day", "hour", "bucket[256]", "truncate[16]"],
        "direction": "asc",
        "null-order": "nulls-first"
      }
    ]
  },
  "stage-create": true,
  "properties": {
    "property1": "string",
    "property2": "string"
  }
}

Response samples

- 200

- 400

- 401

- 403

- 404

- 409

- 419

- 503

- 5XX

{
  "metadata-location": "string",
  "metadata": {
    "format-version": 1,
    "table-uuid": "string",
    "location": "string",
    "last-updated-ms": 0,
    "properties": {
      "property1": "string",
      "property2": "string"
    },
    "schemas": [
      {
        "type": "struct",
        "fields": [
          {
            "id": 0,
            "name": "string",
            "type": ["long", "string", "fixed[16]", "decimal(10,2)"],
            "required": true,
            "doc": "string"
          }
        ],
        "schema-id": 0,
        "identifier-field-ids": [0]
      }
    ],
    "current-schema-id": 0,
    "last-column-id": 0,
    "partition-specs": [
      {
        "spec-id": 0,
        "fields": [
          {
            "field-id": 0,
            "source-id": 0,
            "name": "string",
            "transform": ["identity", "year", "month", "day", "hour", "bucket[256]", "truncate[16]"]
          }
        ]
      }
    ],
    "default-spec-id": 0,
    "last-partition-id": 0,
    "sort-orders": [
      {
        "order-id": 0,
        "fields": [
          {
            "source-id": 0,
            "transform": ["identity", "year", "month", "day", "hour", "bucket[256]", "truncate[16]"],
            "direction": "asc",
            "null-order": "nulls-first"
          }
        ]
      }
    ],
    "default-sort-order-id": 0,
    "snapshots": [
      {
        "snapshot-id": 0,
        "parent-snapshot-id": 0,
        "sequence-number": 0,
        "timestamp-ms": 0,
        "manifest-list": "string",
        "summary": {
          "operation": "append",
          "property1": "string",
          "property2": "string"
        },
        "schema-id": 0
      }
    ],
    "refs": {
      "property1": {
        "type": "tag",
        "snapshot-id": 0,
        "max-ref-age-ms": 0,
        "max-snapshot-age-ms": 0,
        "min-snapshots-to-keep": 0
      },
      "property2": {
        "type": "tag",
        "snapshot-id": 0,
        "max-ref-age-ms": 0,
        "max-snapshot-age-ms": 0,
        "min-snapshots-to-keep": 0
      }
    },
    "current-snapshot-id": 0,
    "last-sequence-number": 0,
    "snapshot-log": [
      {
        "snapshot-id": 0,
        "timestamp-ms": 0
      }
    ],
    "metadata-log": [
      {
        "metadata-file": "string",
        "timestamp-ms": 0
      }
    ],
    "statistics-files": [
      {
        "snapshot-id": 0,
        "statistics-path": "string",
        "file-size-in-bytes": 0,
        "file-footer-size-in-bytes": 0,
        "blob-metadata": [
          {
            "type": "string",
            "snapshot-id": 0,
            "sequence-number": 0,
            "fields": [0],
            "properties": {}
          }
        ]
      }
    ],
    "partition-statistics-files": [
      {
        "snapshot-id": 0,
        "statistics-path": "string",
        "file-size-in-bytes": 0
      }
    ]
  },
  "config": {
    "property1": "string",
    "property2": "string"
  }
}

Register a table in the given namespace using given metadata file location

Register a table using given metadata file location.

Authorizations:

path Parameters

| prefix required | string An optional prefix in the path |

| namespace required | string A namespace identifier as a single string. Multipart namespace parts should be separated by the unit separator ( |